Hewlett-Packard's facial recognition technology tracks the movements of a white woman but not a black man

Particle's automatic soap dispensers failed to recognize a black guest at a Marriott hotel in Atlanta

Google Photos' auto-labeling system identifies an image of two black people as "Gorillas" (Twitter)

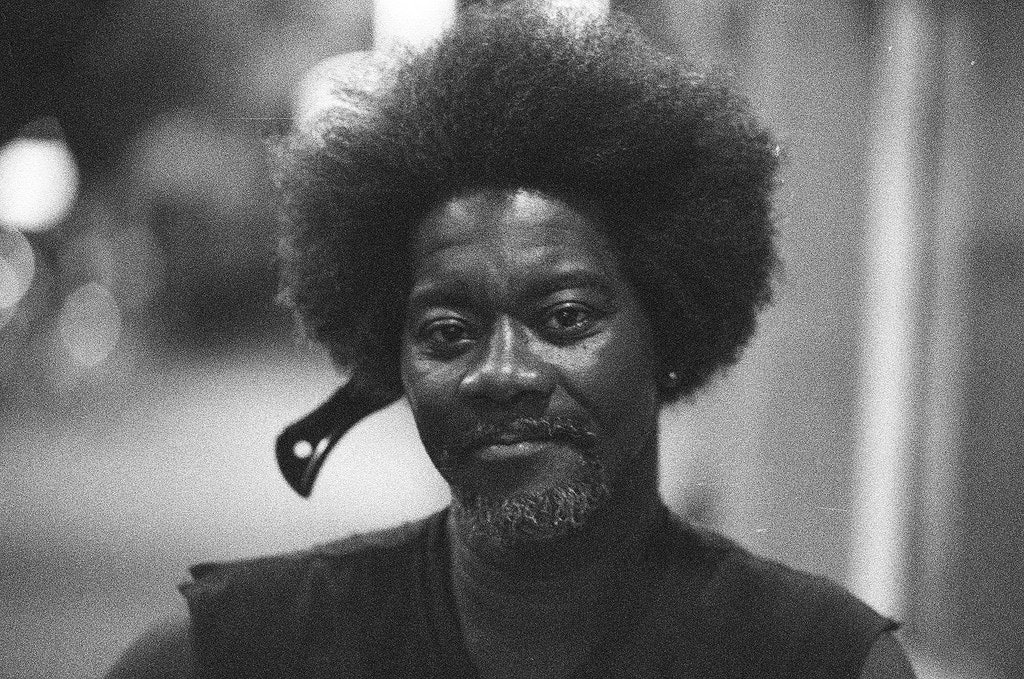

"William," by photographer Corey Deshon (Flickr)

Flickr's auto-tagging feature added the tags "ape" and "animal," which have since been removed

In his discussion of information ethics, Luciano Floridi distinguishes between moral responsibility and moral accountability. Moral responsibility requires intentions and consciousness, whereas moral accountability applies to any agent that can cause good or evil. According to Floridi, non-human agents, such as modern technologies, can be considered moral agents in the infosphere. So in these cases of misidentification, even if no one is morally responsible in the strict sense, there can still be moral accountability and a call for moral action. The absence of moral responsibility does not remove the need for moral accountability.

These mishaps show a need for value sensitive design and for what Philip Brey calls the embedded values approach. When technologies blatantly exclude or misidentify users in ways that carry racist undertones, whether intentionally or not, the embedded values approach holds that such systems and software are not morally neutral. When technologies have moral consequences, they ought to be subjected to ethical analysis. Companies that deploy identification software such as facial recognition should therefore face scrutiny, whether or not their intentions are discriminatory.

Further, the examples above show that technology is not neutral but shaped by society, and thus it can promote or demote particular values. Companies this large, with such diverse user bases, should strive to promote the values of diversity and inclusion. But perhaps the main difficulty is this: how can these values be universally accepted when racism and unconscious bias are pervasive in society?

As expected, each company released a statement explaining that a problem with the software or algorithm, or suboptimal lighting, led to the misidentification, and that there was never any deliberately racist intent. This parallels Brey's explanation: "...it is easier to attribute built-in consequences to technological artifacts that are placed in a fixed context of use than to those that are used in many different contexts." Of course, these examples are dated, and I'd like to believe that no engineer is purposely excluding darker-skinned users, but blaming the technology itself for such flagrant mishaps is no longer an acceptable excuse. Ultimately, we need to consider technologies as moral agents, and stakeholders have an obligation to identify which values these technologies should promote.

This was a great read. I like the connections to both Brey and Floridi and how you laid them out. The problem of unconscious bias is truly difficult to address when engineers or designers can't recognize it in themselves or their co-workers. To offer one critique, it would be interesting to see how these technologies were redesigned, if they were at all.